Python is a versatile programming language that has gained wide popularity thanks to its simplicity, readability, and extensive libraries. One of its key advantages is the ability to handle a wide range of data types and formats, which makes it a strong choice for data analysis and manipulation. In this blog post, we will look at what data sets are, why they matter, and how to work with them effectively in Python.

Understanding Data Sets in Python

A data set, or dataset, is a collection of data that is organized and structured for analysis. It can contain various types of information, such as numerical values, text, dates, and more. Data sets are crucial for data-driven decision-making, as they provide the raw material for analysis, visualization, and machine learning tasks.

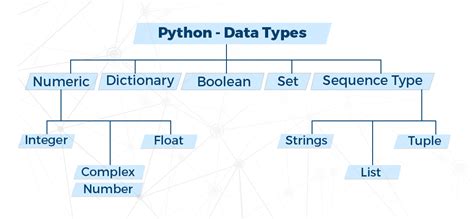

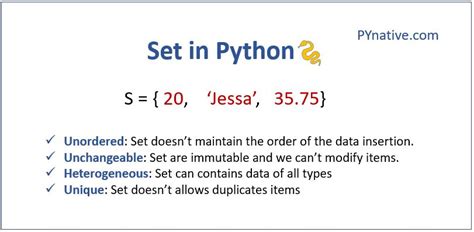

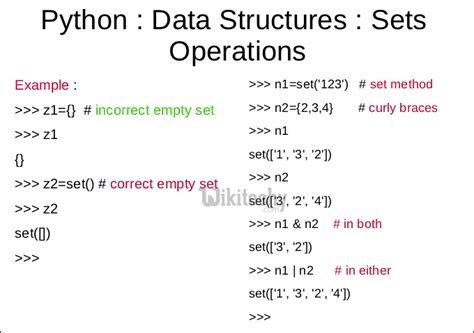

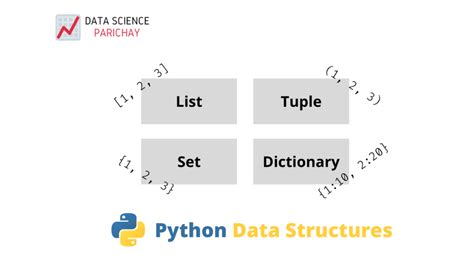

In Python, data sets can be represented using different data structures, such as lists, tuples, dictionaries, and pandas DataFrames. Each of these data structures has its own strengths and use cases, making Python a flexible tool for handling diverse data formats.
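To make the differences concrete, here is a minimal sketch that stores the same small table in three built-in layouts: a list of tuples, a list of dictionaries, and a dictionary of lists. The names, ages, and cities are made up purely for illustration.

```python
# The same small (hypothetical) data set in several built-in structures.

# List of tuples: compact and ordered, fields accessed by position
rows = [
    ("Alice", 34, "Paris"),
    ("Bob", 28, "Berlin"),
    ("Chen", 41, "Tokyo"),
]

# List of dictionaries: each record carries its own field names
records = [{"name": name, "age": age, "city": city} for name, age, city in rows]

# Dictionary of lists ("column-oriented"): one list per column
columns = {
    "name": [r[0] for r in rows],
    "age": [r[1] for r in rows],
    "city": [r[2] for r in rows],
}

# Positional access vs. named access
print(rows[1][1])         # 28
print(records[1]["age"])  # 28

# Column-wise calculations are natural in the column-oriented layout
print(sum(columns["age"]) / len(columns["age"]))  # mean age, about 34.33
```

Tuples suit fixed records that should not change. Dictionaries make code more readable because fields are accessed by name. The column-oriented dictionary can be passed directly to `pd.DataFrame(columns)` when you move to pandas.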

Working with Data Sets: A Step-by-Step Guide

Let's dive into the process of working with data sets in Python. We will cover the following steps:

- Importing data sets

- Exploring and understanding the data

- Data cleaning and preprocessing

- Data analysis and visualization

Step 1: Importing Data Sets

The first step in working with a data set is to load it into your Python environment. Both the standard library and third-party packages make this straightforward. Here are some commonly used methods:

Using the csv Module

If your data set is in a CSV (Comma-Separated Values) format, you can use the csv module to read and parse the data. Here's an example:

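```python
import csv

# Open the CSV file; newline='' lets the csv module handle line endings itself
with open('data.csv', 'r', newline='') as file:
    # Create a CSV reader object
    csv_reader = csv.reader(file)

    # Iterate through the rows and print each row (a list of strings)
    for row in csv_reader:
        print(row)
```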
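When the file has a header row, `csv.DictReader` is often more convenient than `csv.reader` because it returns each row as a dictionary keyed by column name. In this minimal sketch, the CSV content is a made-up example, and `io.StringIO` stands in for an opened file so the snippet runs on its own.

```python
import csv
import io

# Hypothetical CSV content; io.StringIO stands in for open('data.csv', newline='')
csv_text = """name,age,city
Alice,34,Paris
Bob,28,Berlin
"""

# DictReader uses the header row as the keys for every following row
reader = csv.DictReader(io.StringIO(csv_text))
people = [row for row in reader]

for person in people:
    # The csv module returns every field as a string, so convert numbers explicitly
    age = int(person["age"])
    print(person["name"], age)
```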

Using the pandas Library

The pandas library is a powerful tool for data manipulation and analysis. Its central object, the DataFrame, is a two-dimensional, size-mutable table whose columns can hold different data types. pandas can read many file formats, including CSV (`pd.read_csv`), Excel (`pd.read_excel`), and JSON (`pd.read_json`).

```python
import pandas as pd

# Read a CSV file into a DataFrame
df = pd.read_csv('data.csv')

# Display the first few rows of the DataFrame
print(df.head())
```
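`pd.read_csv` also accepts options that reduce cleanup later. This minimal sketch reads a small inline CSV through `io.StringIO` so it runs without a file. The column names and values are illustrative assumptions. It shows two things: a date column parsed into real datetimes, and an empty field arriving as a missing value.

```python
import io
import pandas as pd

# Hypothetical CSV content; in practice you would pass a path such as 'data.csv'
csv_text = """order_id,order_date,amount
1,2024-01-05,19.99
2,2024-01-06,5.50
3,2024-01-09,
"""

# parse_dates converts the listed column to datetimes instead of leaving strings;
# the empty amount field in the last row becomes NaN
df = pd.read_csv(io.StringIO(csv_text), parse_dates=["order_date"])

print(df.dtypes)  # column types inferred by pandas
print(df.shape)   # (rows, columns)
```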

Other File Formats

Python offers libraries for handling other file formats as well. For example, the json module can be used to read and write JSON files, while the xml.etree.ElementTree module can parse XML data.
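As a rough illustration of both standard-library modules, here is a minimal sketch that parses small inline JSON and XML documents. The data and element names are made up. With real files you would use `json.load(file)` or `ET.parse('data.xml').getroot()` instead of the string-based calls.

```python
import json
import xml.etree.ElementTree as ET

# Hypothetical JSON text; json.loads returns native Python lists, dicts, and ints
json_text = '[{"name": "Alice", "age": 34}, {"name": "Bob", "age": 28}]'
people = json.loads(json_text)
print(people[0]["age"] + 1)  # 35

# Hypothetical XML text parsed from a string
xml_text = """<people>
  <person name="Alice"><age>34</age></person>
  <person name="Bob"><age>28</age></person>
</people>"""
root = ET.fromstring(xml_text)

# Attributes come from .get(), child element text from .findtext(); both are strings
ages = {p.get("name"): int(p.findtext("age")) for p in root.findall("person")}
print(ages)  # {'Alice': 34, 'Bob': 28}

# Writing JSON: json.dumps serializes Python objects back to a string
print(json.dumps(ages, indent=2))
```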

Step 2: Exploring and Understanding the Data

Once you have imported your data set, it's crucial to explore and understand its structure and content. This step helps you identify any issues or patterns in the data.

Basic Statistics

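Calculating basic statistics, such as the mean, median, and standard deviation, gives you an initial picture of your data. pandas DataFrames provide built-in methods for these calculations. For example, `describe()` summarizes each numeric column with its count, mean, standard deviation, minimum, quartiles (the 50% row is the median), and maximum.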

```python
import pandas as pd

# Read a CSV file into a DataFrame
df = pd.read_csv('data.csv')

# Calculate basic statistics
print(df.describe())
```
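Beyond the `describe()` summary, you can compute each statistic on its own and check the structure of the data directly. This minimal sketch uses a small made-up DataFrame; the column names are assumptions. Note that pandas skips missing values in these calculations by default.

```python
import pandas as pd

# Small hypothetical data set with one missing value
df = pd.DataFrame({
    "age": [34, 28, 41, None, 30],
    "city": ["Paris", "Berlin", "Tokyo", "Paris", "Berlin"],
})

# Individual statistics (missing values are skipped)
print(df["age"].mean())    # (34 + 28 + 41 + 30) / 4 = 33.25
print(df["age"].median())  # median of 28, 30, 34, 41 = 32.0
print(df["age"].std())     # sample standard deviation (ddof=1)

# Structure and data-quality checks
df.info()                          # column dtypes and non-null counts
print(df.isna().sum())             # missing values per column
print(df["city"].value_counts())   # frequency of each category
```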

Data Visualization

Visualizing your data can provide valuable insights and help you identify trends or outliers. Python offers several libraries for data visualization, with Matplotlib and Seaborn being popular choices.

```python
import pandas as pd
import matplotlib.pyplot as plt

# Read a CSV file into a DataFrame
df = pd.read_csv('data.csv')

# Create a histogram to visualize a numerical column,
# dropping missing values explicitly before plotting
plt.hist(df['column_name'].dropna())
plt.title('Histogram of column_name')
plt.xlabel('Value')
plt.ylabel('Frequency')
plt.show()
```
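A histogram is simply a count of values per bin. To see the numbers behind the bars, you can compute them with `np.histogram`; given the same bins, `plt.hist` draws bars of these heights. This minimal sketch uses made-up values standing in for `df['column_name']`.

```python
import numpy as np

# Hypothetical values standing in for df['column_name']
values = np.array([1, 2, 2, 3, 3, 3, 4, 4, 5, 9])

# np.histogram returns the count per bin and the bin edges
counts, edges = np.histogram(values, bins=4)

# Each bin includes its left edge; the last bin also includes its right edge
for count, left, right in zip(counts, edges[:-1], edges[1:]):
    print(f"{left:g} to {right:g}: {count}")
```

The lone value in the last bin is the kind of isolated point that a histogram makes easy to spot, which leads into the outlier handling in Step 3.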

Step 3: Data Cleaning and Preprocessing

Raw data often contains missing values, duplicates, or inconsistent formatting. Data cleaning and preprocessing are essential steps to ensure the quality and consistency of your data set.
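Duplicates and inconsistent formatting are often related: two rows may only be recognizable as duplicates after their text has been normalized. Here is a minimal sketch on made-up data. The column names and the choice of title case are assumptions for illustration.

```python
import pandas as pd

# Hypothetical raw data: inconsistent whitespace/capitalization, numbers stored as text
df = pd.DataFrame({
    "city": ["Paris", " paris", "Berlin", "Berlin", "TOKYO"],
    "sales": ["100", "100", "250", "250", "75"],
})

# Normalize text: strip surrounding whitespace and use consistent capitalization
df["city"] = df["city"].str.strip().str.title()

# Convert numeric strings to numbers; entries that cannot be parsed would become NaN
df["sales"] = pd.to_numeric(df["sales"], errors="coerce")

# Remove exact duplicate rows (now detectable) and renumber the index
df = df.drop_duplicates().reset_index(drop=True)
print(df)
```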

Handling Missing Values

Missing values can be handled in various ways, depending on the nature of your data. You can choose to drop rows or columns with missing values, impute the missing values with a specific value or the mean/median, or use more advanced techniques like regression imputation.

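```python
import pandas as pd

# Read a CSV file into a DataFrame
df = pd.read_csv('data.csv')

# Option 1: drop rows that contain any missing values
df_dropped = df.dropna()

# Option 2: impute missing values in one column with that column's mean
# (assigning the result back avoids the chained inplace=True pattern,
# which newer pandas versions warn about)
df['column_name'] = df['column_name'].fillna(df['column_name'].mean())
```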
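Which strategy fits depends on the data. The minimal sketch below uses made-up values to show two common judgment calls. First, it drops a column that is mostly empty, using the `thresh` parameter of `dropna`; the threshold of 3 is an arbitrary example. Second, it prefers the median over the mean when an extreme value would distort the mean.

```python
import pandas as pd

# Hypothetical data: 'income' has one missing and one extreme value,
# 'notes' is almost entirely empty
df = pd.DataFrame({
    "income": [30.0, 35.0, None, 40.0, 400.0],
    "notes": [None, None, None, "vip", None],
})

# Keep only columns with at least 3 non-missing values ('notes' is dropped)
df = df.dropna(axis=1, thresh=3)

# The mean is pulled up by the extreme value; the median is not
mean_value = df["income"].mean()      # (30 + 35 + 40 + 400) / 4 = 126.25
median_value = df["income"].median()  # median of 30, 35, 40, 400 = 37.5

# Impute with the median, the more robust choice for this skewed column
df["income"] = df["income"].fillna(median_value)
print(df)
```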

Dealing with Outliers

Outliers are extreme values that deviate significantly from the rest of the data. Depending on your analysis goals, you may choose to remove or transform these values. Common techniques include removing outliers based on a certain threshold or using transformations like logarithmic scaling.

```python
import pandas as pd

# Read a CSV file into a DataFrame
df = pd.read_csv('data.csv')

# Identify outliers using the IQR (interquartile range) method
Q1 = df['column_name'].quantile(0.25)
Q3 = df['column_name'].quantile(0.75)
IQR = Q3 - Q1
lower_bound = Q1 - (1.5 * IQR)
upper_bound = Q3 + (1.5 * IQR)

# Remove outliers: keep rows whose value lies within the bounds
# (rows with a missing value are dropped too, since NaN fails both comparisons)
df = df[(df['column_name'] >= lower_bound) & (df['column_name'] <= upper_bound)]
```
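To see the IQR arithmetic on concrete numbers, and to compare removal with a logarithmic transformation, here is a minimal sketch on made-up, right-skewed values. `np.log1p` computes log(1 + x). It is suited to non-negative data such as counts or amounts, and it keeps every row while shrinking large values far more than small ones.

```python
import numpy as np
import pandas as pd

# Hypothetical skewed values with one extreme point
s = pd.Series([10, 12, 13, 15, 18, 20, 250])

# IQR bounds, using pandas' default linear interpolation for quantiles
q1, q3 = s.quantile(0.25), s.quantile(0.75)  # q1 = 12.5, q3 = 19.0
iqr = q3 - q1                                # 6.5
lower, upper = q1 - 1.5 * iqr, q3 + 1.5 * iqr  # 2.75 and 28.75

# Option A: remove values outside the bounds (drops 250)
kept = s[(s >= lower) & (s <= upper)]

# Option B: keep every value but compress the scale
logged = np.log1p(s)
print(kept.tolist())
print(logged.round(2).tolist())
```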

Feature Engineering

Feature engineering involves creating new features from existing ones to improve the performance of your analysis or machine learning models. This step requires domain knowledge and creativity.

```python
import pandas as pd

# Read a CSV file into a DataFrame
df = pd.read_csv('data.csv')

# Create a new feature by combining existing columns
df['new_feature'] = df['column1'] + df['column2']
```
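Adding two columns is only one kind of feature. This minimal sketch shows a few other common patterns on a made-up orders table: arithmetic on columns, date parts, and binning a continuous value into categories. The column names and bin edges are illustrative assumptions.

```python
import pandas as pd

# Hypothetical orders table
df = pd.DataFrame({
    "order_date": pd.to_datetime(["2024-01-05", "2024-01-06", "2024-02-10"]),
    "price": [20.0, 5.0, 12.0],
    "quantity": [2, 10, 3],
})

# Combine columns arithmetically
df["revenue"] = df["price"] * df["quantity"]

# Extract parts of a date via the .dt accessor
df["month"] = df["order_date"].dt.month
df["weekday"] = df["order_date"].dt.day_name()

# Bin a continuous value into labeled categories: (0, 3] -> small, (3, 10] -> large
df["order_size"] = pd.cut(df["quantity"], bins=[0, 3, 10], labels=["small", "large"])
print(df)
```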

Step 4: Data Analysis and Visualization

With your data set cleaned and preprocessed, you can now perform in-depth analysis and create visualizations to gain insights and communicate your findings.

Statistical Analysis

Python offers a wide range of libraries for statistical analysis, such as statsmodels and SciPy. These libraries provide functions for hypothesis testing, regression analysis, and more.

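```python
import pandas as pd
import statsmodels.api as sm

# Read a CSV file into a DataFrame
df = pd.read_csv('data.csv')

# Perform a linear regression analysis
X = df[['feature1', 'feature2']]
y = df['target_column']

# sm.OLS does not add an intercept on its own; add_constant adds a 'const' column
X = sm.add_constant(X)
model = sm.OLS(y, X).fit()
print(model.summary())
```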
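statsmodels' `OLS` fits exactly the columns it is given. Unless you add a column of ones (which is what `sm.add_constant` does), the fitted line is forced through the origin. This minimal sketch shows the effect with plain NumPy least squares on made-up data generated from y = 2x + 1. It is an illustration of the idea, not statsmodels' internal implementation.

```python
import numpy as np

# Hypothetical data generated exactly from y = 2*x + 1
x = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
y = 2 * x + 1

# Without a column of ones, only a slope is fitted (line through the origin)
X_no_const = x.reshape(-1, 1)
coef_no_const, *_ = np.linalg.lstsq(X_no_const, y, rcond=None)

# With a column of ones, the intercept is estimated as well
X_const = np.column_stack([np.ones_like(x), x])
coef_const, *_ = np.linalg.lstsq(X_const, y, rcond=None)

print(coef_no_const)  # slope only, about 2.33: biased to make up for the missing intercept
print(coef_const)     # approximately [1.0, 2.0]: intercept, then slope
```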

Advanced Visualization

Beyond basic visualizations, Python provides libraries like Plotly and Altair for creating interactive and customizable plots. These libraries allow you to create complex visualizations with various chart types and features.

```python
import pandas as pd
import plotly.express as px

# Read a CSV file into a DataFrame
df = pd.read_csv('data.csv')

# Create an interactive scatter plot, coloring points by a categorical column
fig = px.scatter(df, x='column1', y='column2', color='category_column')
fig.show()
```

Best Practices and Tips

When working with data sets in Python, it's essential to follow best practices to ensure efficient and accurate analysis.

- Data Validation: Implement data validation checks to ensure the integrity of your data. This can include validating data types, checking for invalid values, and verifying data consistency.

- Documentation: Document your data sets and the preprocessing steps you perform. This documentation will be invaluable when revisiting your analysis or sharing your work with others.

- Version Control: Use version control systems like Git to track changes in your data sets and analysis code. This helps with reproducibility and collaboration.

- Data Privacy and Security: Be mindful of data privacy and security concerns, especially when working with sensitive information. Ensure that you handle and store data securely.
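Data validation checks like these can start as a small function that returns a list of problems. Here is a minimal sketch; the required columns (`id`, `age`, `email`) and the rules are hypothetical examples. Dedicated validation libraries exist, but this version uses plain pandas only.

```python
import pandas as pd


def validate(df: pd.DataFrame) -> list[str]:
    """Return human-readable problems; an empty list means all checks passed.
    The column names and rules below are hypothetical examples."""
    problems = []

    # Required columns are present; later checks depend on them
    missing = {"id", "age", "email"} - set(df.columns)
    if missing:
        problems.append(f"missing columns: {sorted(missing)}")
        return problems

    # Data type check, then a range check that only makes sense for numbers
    if not pd.api.types.is_numeric_dtype(df["age"]):
        problems.append("age is not numeric")
    elif not df["age"].between(0, 120).all():
        problems.append("age missing or outside 0-120")

    # Consistency: identifiers must be unique
    if df["id"].duplicated().any():
        problems.append("duplicate ids")

    # Simple format check: every email contains '@'
    if not df["email"].str.contains("@", na=False).all():
        problems.append("malformed email")

    return problems


good = pd.DataFrame({"id": [1, 2], "age": [34, 28], "email": ["a@x.org", "b@y.org"]})
bad = pd.DataFrame({"id": [1, 1], "age": [34, 150], "email": ["a@x.org", "nope"]})
print(validate(good))  # []
print(validate(bad))   # ['age missing or outside 0-120', 'duplicate ids', 'malformed email']
```

Returning all problems at once, rather than raising on the first one, makes the report more useful when checking a new file.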

Conclusion

Python's versatility and powerful libraries make it an excellent choice for working with data sets. By following the steps outlined in this blog post, you can effectively import, explore, clean, and analyze your data. Remember to apply best practices and continuously explore new techniques to enhance your data analysis skills.

FAQ

What is the best way to handle large data sets in Python?

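For data sets that do not fit comfortably in memory, you can read files in pieces with pandas (for example, with the `chunksize` parameter of `pd.read_csv`) or use Dask. Dask's DataFrame API mirrors much of pandas but splits the data into partitions that can be processed in parallel and out of core. Loading only the columns you need (the `usecols` parameter) and choosing compact data types also reduce memory usage.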
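One pandas-only approach is to aggregate a file chunk by chunk so that the whole file never has to be in memory at once. In this minimal sketch, the inline CSV, the column names, and the chunk size of 4 are illustrative assumptions; with a real file you would pass its path and a much larger chunk size.

```python
import io
import pandas as pd

# Hypothetical CSV content: 10 rows alternating between two cities
csv_text = "city,sales\n" + "\n".join(
    f"{city},{amount}" for city, amount in [("Paris", 10), ("Berlin", 5)] * 5
)

totals = None
# chunksize makes read_csv yield DataFrames of at most 4 rows each;
# usecols limits parsing to the columns we actually need
for chunk in pd.read_csv(io.StringIO(csv_text), chunksize=4, usecols=["city", "sales"]):
    partial = chunk.groupby("city")["sales"].sum()
    # Combine partial sums; fill_value=0 covers cities absent from a chunk
    totals = partial if totals is None else totals.add(partial, fill_value=0)

print(totals)
```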

How can I handle missing values in my data set?

There are several strategies for handling missing values, including dropping rows or columns with missing data, imputing missing values with the mean or median, or using more advanced techniques like regression imputation. Choose the approach that best suits your data and analysis goals.

What are some common data visualization libraries in Python?

Python offers a variety of data visualization libraries, including Matplotlib, Seaborn, Plotly, and Altair. Each library has its strengths and use cases, so choose the one that best fits your visualization needs and preferences.

How can I share my data analysis results with others?

You can share your data analysis results by creating interactive dashboards using libraries like Plotly Dash or Streamlit. These libraries allow you to build web-based applications that display your visualizations and analysis results in an accessible and interactive manner.