Extracting data efficiently is the first step toward analyzing it. Whether you're a data scientist, a marketer, or simply curious about data, this guide walks through five practical ways to extract data: web scraping, API integration, dedicated extraction tools, Google Sheets, and command-line utilities.

1. Web Scraping: Harvesting Data from the Web

Web scraping, also known as web harvesting, is an automated process of extracting data from websites. It involves writing code or using specialized tools to collect and organize information from web pages, which can then be stored and analyzed.

Benefits of Web Scraping

- Accessibility: Web scraping allows you to access and collect data from a vast array of websites, making it an invaluable tool for gathering information from diverse sources.

- Speed: Automated web scraping tools can extract data quickly, saving you time and effort compared to manual data collection methods.

- Flexibility: With web scraping, you can customize your data extraction process to suit your specific needs, targeting specific websites, pages, or even individual elements within a page.

Tools and Techniques

There are numerous tools and programming languages available for web scraping, including:

- Python: A popular choice for web scraping due to its versatility and powerful libraries like BeautifulSoup and Requests.

- R: Ideal for statistical analysis and data visualization, R also offers packages like rvest for web scraping.

- JavaScript: While primarily used for front-end development, JavaScript can be leveraged for web scraping through tools like Puppeteer or Playwright.

- Dedicated Web Scraping Tools: Services like import.io and Octoparse provide user-friendly interfaces for web scraping, making it accessible to non-programmers.
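As a minimal sketch of what targeting individual elements looks like in Python, the example below pulls link text and URLs out of an HTML snippet. To stay runnable without installing anything, it uses the standard library's html.parser module rather than BeautifulSoup; the LinkExtractor class, the extract_links helper, and the sample HTML are all made up for illustration.

```python
from html.parser import HTMLParser


class LinkExtractor(HTMLParser):
    """Collect (text, href) pairs for every <a href=...> element."""

    def __init__(self):
        super().__init__()
        self.links = []     # finished (text, href) pairs
        self._href = None   # href of the <a> currently open, if any
        self._text = []     # text fragments seen inside that <a>

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        # Only keep text that appears inside an open link.
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append(("".join(self._text).strip(), self._href))
            self._href = None


def extract_links(html):
    """Return a list of (link text, href) tuples found in the HTML string."""
    parser = LinkExtractor()
    parser.feed(html)
    parser.close()
    return parser.links


# Hypothetical page fragment standing in for a downloaded web page.
SAMPLE_HTML = """
<ul>
  <li><a href="/reports/2023.csv">2023 report</a></li>
  <li><a href="/reports/2024.csv">2024 <b>report</b></a></li>
</ul>
"""

print(extract_links(SAMPLE_HTML))
```

In a real scraper the HTML string would come from an HTTP response, and a library such as BeautifulSoup would usually replace the hand-written parser class.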

Tips for Effective Web Scraping

- Always respect the website's terms of service and robots.txt file to avoid legal issues and potential website bans.

- Use headers and user-agents to mimic browser behavior, making your web scraping requests less suspicious.

- Consider implementing rate limiting to avoid overwhelming the target website with requests.

- Utilize proxies to distribute your requests across different IP addresses, further reducing the risk of being blocked.
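The first three tips can be sketched with Python's standard library. The example below checks URLs against robots.txt rules, sends a User-Agent header, and enforces a minimum pause between requests. The bot name, the sample robots.txt content, the example.com URLs, and the helper names (load_rules, RateLimiter, allowed_urls, fetch) are assumptions for illustration, not part of any particular site's policy or any library's API.

```python
import time
import urllib.request
import urllib.robotparser

USER_AGENT = "example-research-bot/0.1"  # hypothetical identifier

# Hypothetical robots.txt content; a real scraper would download the site's own file.
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /private/
"""


def load_rules(robots_txt):
    """Parse robots.txt text into a RobotFileParser."""
    parser = urllib.robotparser.RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


class RateLimiter:
    """Ensure at least min_interval seconds pass between calls to wait()."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last = None

    def wait(self):
        now = self._clock()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now


def allowed_urls(urls, rules, limiter):
    """Yield only the URLs robots.txt permits, pausing before each one."""
    for url in urls:
        if not rules.can_fetch(USER_AGENT, url):
            continue  # skip disallowed paths entirely
        limiter.wait()
        yield url


def fetch(url, timeout=10):
    """Download a page, sending the User-Agent header with the request."""
    request = urllib.request.Request(url, headers={"User-Agent": USER_AGENT})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read()


rules = load_rules(SAMPLE_ROBOTS)
limiter = RateLimiter(min_interval=0.2)
candidates = [
    "https://example.com/public/page",
    "https://example.com/private/data",
    "https://example.com/public/other",
]
for url in allowed_urls(candidates, rules, limiter):
    print("would fetch", url)  # call fetch(url) here in a real run
```

The clock and sleep parameters exist only so the limiter can be exercised without real delays; in normal use the defaults are enough.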

2. API Integration: Tapping into Data Sources

Application Programming Interfaces (APIs) are an efficient way to access and retrieve data from various sources. By integrating with APIs, you can directly connect to databases, web services, or platforms and extract the information you need.

Advantages of API Integration

- Real-time Data: APIs often provide access to up-to-date information, ensuring you work with the latest data available.

- Efficiency: API integration eliminates the need for manual data entry, saving time and reducing the risk of errors.

- Scalability: APIs can handle large volumes of data, making them suitable for businesses with extensive data needs.

Common APIs for Data Extraction

- Google APIs: Access a wide range of Google services, including Google Sheets, Google Analytics, and Google Maps, to extract and analyze data.

- Social Media APIs: Integrate with platforms like Twitter, Facebook, and Instagram to gather user-generated content, trends, and insights.

- Weather APIs: Extract real-time weather data for your location or specific regions, useful for various applications.

- Financial APIs: Access stock market data, currency exchange rates, and financial news to make informed investment decisions.

Best Practices for API Integration

- Familiarize yourself with the API documentation to understand its capabilities, limitations, and any required authentication methods.

- Respect the API's rate limits and cache responses you have already fetched, so you make fewer calls and avoid overwhelming the server.

- Consider implementing error handling mechanisms to gracefully handle API failures and ensure the stability of your data extraction process.
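The caching and error-handling practices above can be sketched as two small helpers: one that retries a failing call with exponential backoff, and one that caches results for a fixed time. This is a sketch under stated assumptions rather than a production client: the helper names (fetch_json, with_retries, TTLCache) and the api.example.com URL are hypothetical, and a real integration should follow the authentication and retry guidance in the API's own documentation.

```python
import json
import time
import urllib.error
import urllib.request


def fetch_json(url, timeout=10):
    """Fetch a URL and decode its JSON body."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return json.load(response)


def with_retries(call, attempts=3, base_delay=1.0, sleep=time.sleep,
                 retry_on=(urllib.error.URLError, TimeoutError)):
    """Run call(); on a retryable error wait base_delay * 2**n, then retry.

    Note: URLError also covers HTTP errors such as 404, which retrying will
    not fix; a real client would usually narrow retry_on accordingly.
    """
    for attempt in range(attempts):
        try:
            return call()
        except retry_on:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the error to the caller
            sleep(base_delay * 2 ** attempt)


class TTLCache:
    """Keep fetched values for ttl seconds to avoid repeating API calls."""

    def __init__(self, ttl, clock=time.monotonic):
        self.ttl = ttl
        self._clock = clock
        self._store = {}  # key -> (time stored, value)

    def get_or_fetch(self, key, fetch):
        now = self._clock()
        hit = self._store.get(key)
        if hit is not None and now - hit[0] < self.ttl:
            return hit[1]
        value = fetch()
        self._store[key] = (now, value)
        return value


# Example wiring (not executed here because it needs network access):
#   url = "https://api.example.com/v1/rates"   # hypothetical endpoint
#   cache = TTLCache(ttl=60)
#   data = cache.get_or_fetch(url, lambda: with_retries(lambda: fetch_json(url)))
```

Passing the fetch step in as a function keeps the retry and cache logic independent of any one API, and makes both easy to exercise with a fake call.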

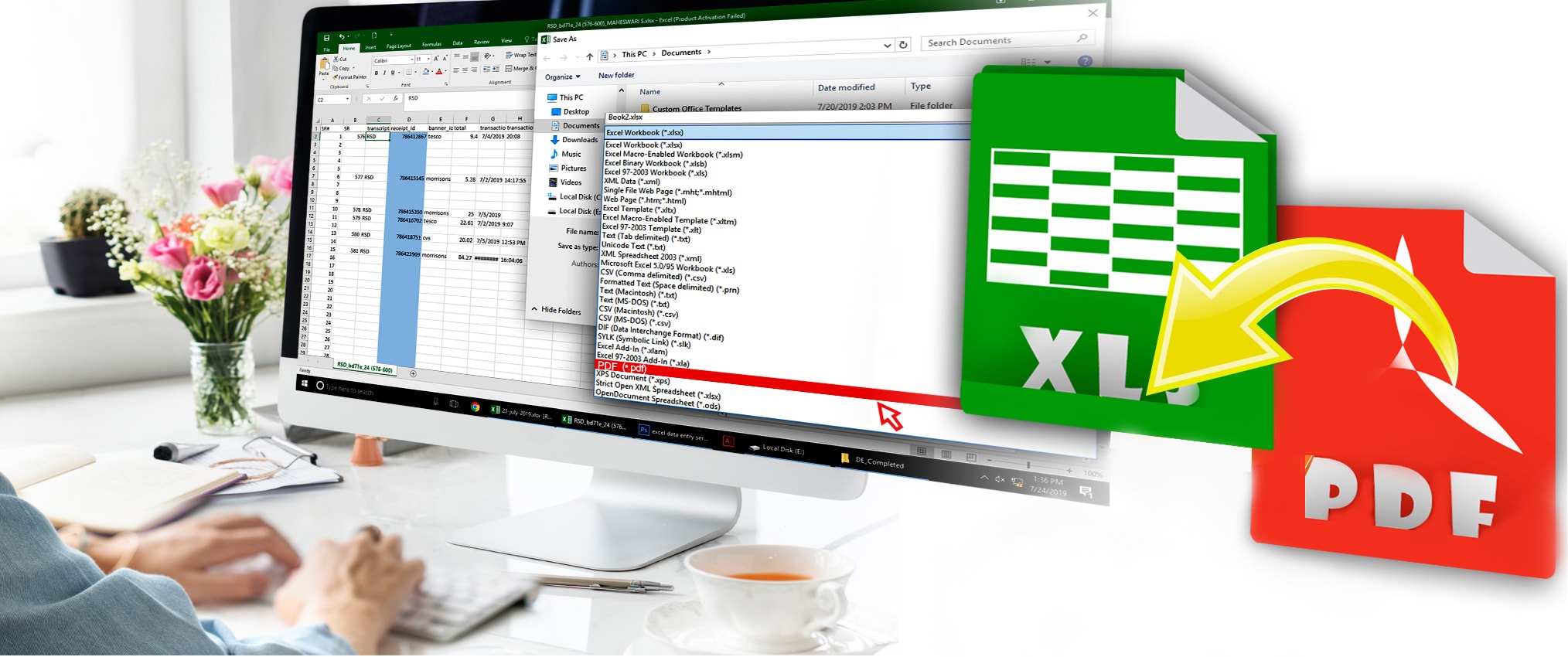

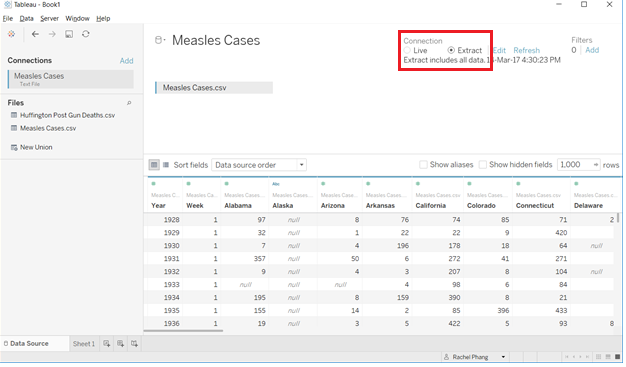

3. Data Extraction Tools: Simplifying the Process

For those who prefer a more user-friendly approach, various data extraction tools are available to streamline the process. These tools often provide graphical interfaces, making it easier to define data sources, set extraction rules, and export the collected data.

Popular Data Extraction Tools

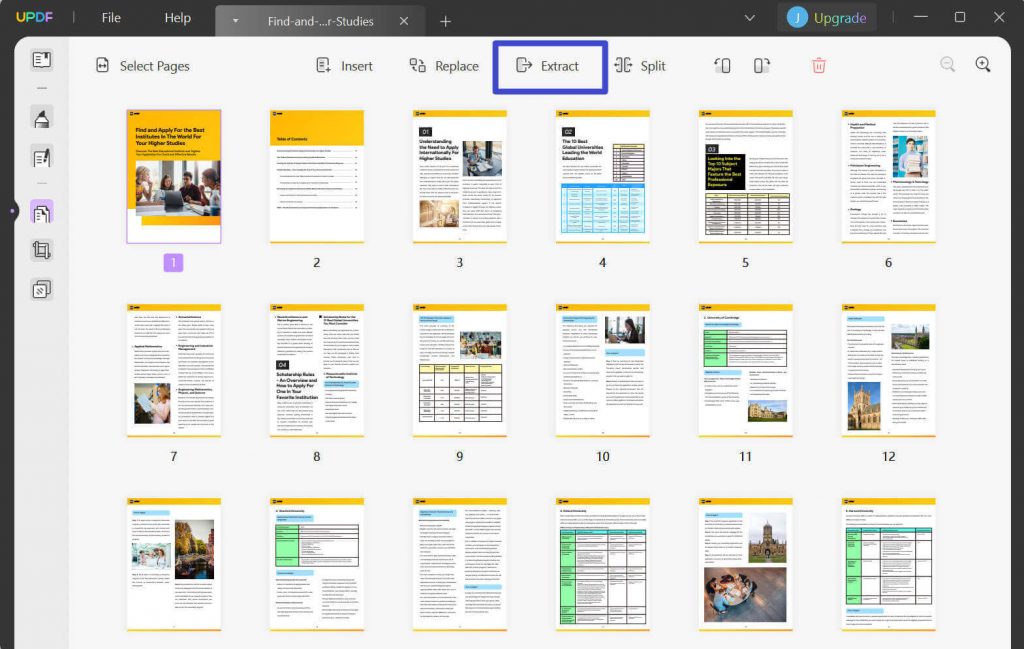

- Tabula: A tool for extracting tables from PDF files. It runs locally with a browser-based interface, and a command-line version (tabula-java) is also available.

- Import.io: A versatile platform that allows you to extract data from websites, providing a user-friendly interface and advanced customization options.

- OutWit Hub: A tool for extracting data from web pages and online documents, distributed as a browser extension (originally for Firefox) and as a standalone desktop application.

- Scrapy: An open-source Python framework for web crawling and scraping, suited to large-scale data extraction projects. Unlike the other tools in this list, it is driven by code rather than a graphical interface.

Considerations for Choosing a Data Extraction Tool

- Evaluate the data sources you need to extract data from and ensure the tool supports those sources.

- Consider the complexity of your data extraction requirements and choose a tool that can handle your specific needs.

- Look for tools with export options that align with your preferred data storage and analysis methods.

4. Data Scraping with Google Sheets

Google Sheets, a popular spreadsheet application, offers useful features for data scraping and extraction. With built-in import functions, plus add-ons and custom scripts where needed, you can automate data extraction from various sources directly into your spreadsheet.

Google Sheets Functions and Add-ons for Data Extraction

- IMPORTHTML: A built-in function (no add-on required) that pulls an HTML table or list from a web page directly into your Google Sheet, making it easy to gather and organize information from web pages.

- IMPORTXML: Also built in, and more flexible than IMPORTHTML: it extracts data from XML, HTML, and feed documents using an XPath query. It does not accept CSS selectors.

- ImportJSON: Google Sheets has no built-in JSON import function. Community add-ons and Apps Script custom functions, commonly named ImportJSON, fill that gap and let you import JSON data into your spreadsheet.

Formulas for Data Extraction in Google Sheets

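- IMPORTHTML: Use this formula to extract an HTML table or list by specifying the URL, the query type ("table" or "list"), and the index of that element on the page, counting from 1. For example: =IMPORTHTML("https://example.com/stats", "table", 1).

- IMPORTXML: Extract data with an XPath query, which makes it suitable for more complex web scraping tasks. For example, =IMPORTXML("https://example.com", "//h2") returns every second-level heading on the page.

- IMPORTDATA: This formula imports data from a URL that serves CSV or TSV, for example =IMPORTDATA("https://example.com/data.csv"). For market data, use the separate GOOGLEFINANCE function.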

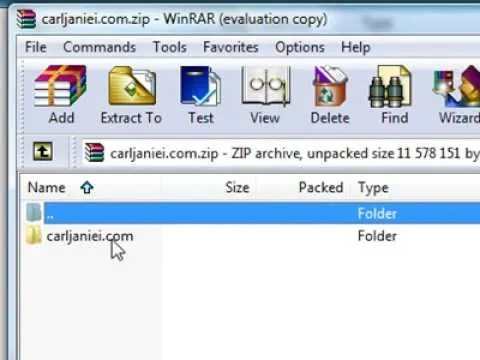

5. Command-Line Data Extraction: Power at Your Fingertips

For those comfortable with the command line, there are powerful tools and utilities available for data extraction. These tools offer flexibility and control, allowing you to customize your data extraction process to a high degree.

Command-Line Tools for Data Extraction

- Wget: A powerful tool for downloading files from the web, including entire websites or specific files, making it useful for web scraping and data extraction.

- Curl: A versatile command-line tool for transferring data, Curl can be used to fetch data from various sources, including HTTP, HTTPS, FTP, and more.

- Xtract: A command-line tool designed specifically for data extraction, Xtract allows you to extract data from various file formats, including PDFs, Excel, and CSV.

Tips for Command-Line Data Extraction

- Familiarize yourself with regular expressions to define patterns and extract specific data from text files or web pages.

- Use text processing utilities like awk, sed, and grep to manipulate and filter data, making it easier to extract the information you need.

- Consider piping and redirection to chain commands together, allowing for more complex data extraction workflows.
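To illustrate the regular-expression tip, here is a small Python sketch that pulls structured fields out of plain text and then filters them, much as a grep-and-awk pipeline would on the command line. The log format, the sample lines, and the function names are invented for the example; a rough shell counterpart, assuming the same lines were saved in a hypothetical access.log, would be grep -E ' (4|5)[0-9]{2}$' access.log | awk '{print $4}'.

```python
import re

# Hypothetical log lines standing in for a downloaded text file.
SAMPLE_LOG = """\
2024-03-01 12:00:01 GET /products/42 200
2024-03-01 12:00:05 GET /products/17 404
2024-03-02 08:30:00 POST /orders 201
"""

# Named groups label each field so later code can refer to it by name.
LINE_PATTERN = re.compile(
    r"^(?P<date>\d{4}-\d{2}-\d{2}) \S+ "
    r"(?P<method>[A-Z]+) (?P<path>\S+) (?P<status>\d{3})$",
    re.MULTILINE,
)


def extract_requests(text):
    """Return one dict of named fields per line that matches the pattern."""
    return [match.groupdict() for match in LINE_PATTERN.finditer(text)]


def failed_paths(text):
    """Return the paths of requests whose status code starts with 4 or 5."""
    return [
        row["path"]
        for row in extract_requests(text)
        if row["status"].startswith(("4", "5"))
    ]


print(failed_paths(SAMPLE_LOG))
```

Lines that do not match the pattern are silently skipped, which is convenient for noisy input but worth remembering when a count looks too low.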

Conclusion

By exploring these five methods of data extraction, you now have a comprehensive toolkit to gather and analyze information from various sources. Whether you choose web scraping, API integration, data extraction tools, Google Sheets, or command-line utilities, each method offers unique advantages and use cases. Remember to respect the terms of service and privacy policies of the websites and services you interact with, and always handle data responsibly and ethically.

FAQ

What is the difference between web scraping and web crawling?

Web scraping focuses on extracting specific data from web pages, while web crawling involves systematically browsing and indexing web pages for search engines or data collection purposes.

Are there any legal considerations for web scraping?

Yes, it’s important to respect the terms of service and privacy policies of websites. Always ensure you have the necessary permissions and avoid extracting data for malicious purposes.

Can I use Google Sheets for large-scale data extraction projects?

While Google Sheets is a powerful tool, it may not be the most efficient for extremely large-scale projects. For such cases, consider using dedicated web scraping tools or programming languages like Python.

What are some common challenges in data extraction?

Challenges may include dynamic web pages, anti-scraping measures, and constantly changing website structures. It’s important to stay updated with the latest tools and techniques to overcome these obstacles.

How can I ensure the accuracy of extracted data?

Always validate the extracted data against the original source and implement error-handling mechanisms to catch and address any discrepancies.