Step 1: Define Your Objectives and Scope

Before diving into data extraction, it’s crucial to establish clear objectives and define the scope of your project. Ask yourself: What specific insights or information are you seeking? Which datasets or sources will be relevant to your analysis?

Step 2: Identify Data Sources

The next step is to identify the data sources that align with your objectives. This could include websites, databases, APIs, or even documents. Evaluate the availability, accessibility, and reliability of these sources to ensure they meet your requirements.
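For websites, one quick accessibility check is whether the site's robots.txt allows your crawler to fetch the pages you need. The minimal sketch below uses Python's standard-library `urllib.robotparser`. The robots.txt content, the user-agent string `my-extractor`, and the URLs are hypothetical placeholders. robots.txt states the site owner's crawling preferences; it is not a substitute for reading the site's terms of use.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content. In practice you would fetch the real file
# from https://<site>/robots.txt, e.g. with RobotFileParser.set_url() and .read().
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""

def check_access(robots_txt, user_agent, url):
    """Return True if the given robots.txt allows user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)

if __name__ == "__main__":
    for url in ("https://example.com/products", "https://example.com/private/report"):
        print(url, "allowed" if check_access(SAMPLE_ROBOTS_TXT, "my-extractor", url) else "disallowed")
```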

Step 3: Assess Data Quality and Compatibility

Data quality is paramount for successful extraction and analysis. Assess the quality of your identified sources by checking for accuracy, completeness, and consistency. Additionally, ensure that the data formats are compatible with your extraction tools and that you have the necessary permissions to access and use the data.
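Part of this assessment can be automated. The sketch below uses only Python's standard library on a few sample rows. It measures completeness, meaning the share of rows with a non-empty value in each field. It also applies one consistency rule: dates must be written as `YYYY-MM-DD`. The field names, the date rule, and the sample data are assumptions for illustration. Checking accuracy usually requires comparison against a trusted reference, and this sketch does not attempt that.

```python
from datetime import datetime

# Hypothetical sample rows from a candidate source.
SAMPLE_RECORDS = [
    {"id": "1", "price": "19.99", "updated": "2024-03-01"},
    {"id": "2", "price": "",      "updated": "2024-03-02"},
    {"id": "3", "price": "7.50",  "updated": "03/04/2024"},
]

def is_filled(value):
    """True if value is present and not just whitespace."""
    return value is not None and str(value).strip() != ""

def completeness(rows, field):
    """Fraction of rows with a non-empty value for field."""
    return sum(is_filled(r.get(field)) for r in rows) / len(rows) if rows else 0.0

def is_iso_date(value):
    """True if value parses as YYYY-MM-DD."""
    try:
        datetime.strptime(value, "%Y-%m-%d")
        return True
    except (TypeError, ValueError):
        return False

def profile(rows, fields, date_field):
    """Per-field completeness plus the ids of rows whose date breaks the format rule."""
    report = {f: round(completeness(rows, f), 2) for f in fields}
    report["bad_dates"] = [r.get("id") for r in rows if not is_iso_date(r.get(date_field))]
    return report

if __name__ == "__main__":
    print(profile(SAMPLE_RECORDS, ["id", "price", "updated"], "updated"))
```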

Step 4: Choose Your Extraction Tools

Select the appropriate tools for your data extraction needs. Options include dedicated web scraping software, a source's own API, or a programming language such as Python with libraries like BeautifulSoup (for parsing HTML) or Scrapy (a crawling and scraping framework). Consider factors like the complexity of the data source, the volume of data, and the frequency of updates when choosing your tools.
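For a small, static HTML page, Python's standard-library `html.parser` may be all you need. BeautifulSoup or Scrapy tend to pay off as pages get messier or crawls get larger. The minimal sketch below assumes the values you want are in elements marked with a CSS class. The class names and the sample HTML are hypothetical.

```python
from html.parser import HTMLParser

class ClassTextExtractor(HTMLParser):
    """Collect the text of every element whose class list contains target_class."""

    def __init__(self, target_class):
        super().__init__()
        self.target_class = target_class
        self.open_tag = None  # tag name of the matching element we are currently inside
        self.depth = 0        # nesting depth of that tag name
        self.buffer = []
        self.results = []

    def handle_starttag(self, tag, attrs):
        if self.open_tag is not None:
            if tag == self.open_tag:
                self.depth += 1  # same tag nested inside the match
            return
        classes = (dict(attrs).get("class") or "").split()
        if self.target_class in classes:
            self.open_tag, self.depth, self.buffer = tag, 1, []

    def handle_endtag(self, tag):
        if tag == self.open_tag:
            self.depth -= 1
            if self.depth == 0:
                self.results.append("".join(self.buffer).strip())
                self.open_tag = None

    def handle_data(self, data):
        if self.open_tag is not None:
            self.buffer.append(data)

def extract_by_class(html, target_class):
    """Return the text of all elements carrying target_class, in document order."""
    parser = ClassTextExtractor(target_class)
    parser.feed(html)
    parser.close()
    return parser.results

# Hypothetical page fragment.
SAMPLE_HTML = """
<ul>
  <li><span class="name">Widget</span> <span class="price">$19.99</span></li>
  <li><span class="name">Gadget</span> <span class="price">$7.50</span></li>
</ul>
"""

if __name__ == "__main__":
    print(extract_by_class(SAMPLE_HTML, "price"))
```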

Step 5: Set Up Your Extraction Environment

Once you’ve chosen your tools, set up your extraction environment. This may involve installing software, configuring API access, or setting up virtual environments for coding. Ensure that the environment has enough memory, storage, and network bandwidth for the data volume you expect.

Step 6: Extract Data

Now it’s time to extract the data. If you’re using web scraping tools, configure the settings to target the specific data you need. For APIs, make sure you’re using the correct endpoints and parameters. If you’re coding your extraction, keep your code efficient and make it handle errors such as timeouts, failed requests, and unexpected page layouts gracefully.
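One common way to handle transient failures gracefully is to retry with exponential backoff. The sketch below is a minimal version built on Python's standard `urllib`. It retries network errors, timeouts, HTTP 429, and 5xx responses. It does not retry other 4xx responses such as 404, because those will not fix themselves. The function names, the default of three attempts, and the 1-second base delay are assumptions. The `fetcher` and `sleep` parameters exist so the retry logic can be tested without network access.

```python
import logging
import time
import urllib.error
import urllib.request

log = logging.getLogger("extractor")

def fetch(url, timeout=10.0):
    """Download url and return the body as text (assumes UTF-8)."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8")

def fetch_with_retries(url, fetcher=fetch, attempts=3, base_delay=1.0, sleep=time.sleep):
    """Call fetcher(url), retrying transient failures with exponential backoff.

    Waits base_delay, 2 * base_delay, 4 * base_delay, ... between attempts
    and re-raises the last error once all attempts are used up.
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return fetcher(url)
        except urllib.error.HTTPError as exc:  # must come before URLError (its parent class)
            retryable = exc.code == 429 or exc.code >= 500
            if not retryable or attempt == attempts - 1:
                raise
            log.warning("HTTP %s for %s, retrying", exc.code, url)
        except (urllib.error.URLError, TimeoutError) as exc:
            # Network-level problems such as DNS failures, refused connections, timeouts.
            if attempt == attempts - 1:
                raise
            log.warning("%s for %s, retrying", exc, url)
        sleep(base_delay * 2 ** attempt)
```

In a real run you would simply call `fetch_with_retries("https://example.com/products")` with a URL of your own and pass the returned HTML to your parser.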

Step 7: Validate and Clean the Data

After extraction, validate the data to ensure it meets your quality standards. Check for errors, inconsistencies, and missing values. Clean the data by removing duplicates and irrelevant records and by normalizing inconsistent formats (for example, prices or dates written in different styles). This step is crucial to ensure the accuracy and reliability of your analysis.
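A minimal cleaning pass might look like the sketch below. It drops rows missing a required value and drops rows whose price cannot be parsed. It also normalizes whitespace and price strings and removes case-insensitive duplicates. Prices are stored as `Decimal` to avoid floating-point rounding surprises. The field names, the `$` and `,` price format, and the sample rows are hypothetical.

```python
from decimal import Decimal, InvalidOperation

def is_filled(value):
    """True if value is present and not just whitespace."""
    return value is not None and str(value).strip() != ""

def parse_price(text):
    """Turn strings like '$1,250.00' into Decimal('1250.00'); None if unparseable."""
    try:
        price = Decimal(str(text).replace("$", "").replace(",", "").strip())
    except InvalidOperation:
        return None
    return price if price.is_finite() else None  # reject 'NaN' and 'Infinity'

def clean_records(rows, required=("name", "price")):
    """Drop incomplete or invalid rows, normalize formats, and remove duplicates."""
    seen = set()
    cleaned = []
    for row in rows:
        if not all(is_filled(row.get(field)) for field in required):
            continue  # missing a required value
        price = parse_price(row["price"])
        if price is None:
            continue  # e.g. "N/A"
        name = " ".join(str(row["name"]).split())  # collapse stray whitespace
        key = (name.casefold(), price)             # case-insensitive duplicate check
        if key in seen:
            continue
        seen.add(key)
        cleaned.append({"name": name, "price": price})
    return cleaned

# Hypothetical raw rows straight from an extraction run.
RAW_ROWS = [
    {"name": "Widget ", "price": "$19.99"},
    {"name": "widget", "price": "19.99"},      # duplicate after normalization
    {"name": "Gadget", "price": ""},           # missing price
    {"name": "Gizmo", "price": "$1,250.00"},
    {"name": "Doohickey", "price": "N/A"},     # unparseable price
]

if __name__ == "__main__":
    print(clean_records(RAW_ROWS))
```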

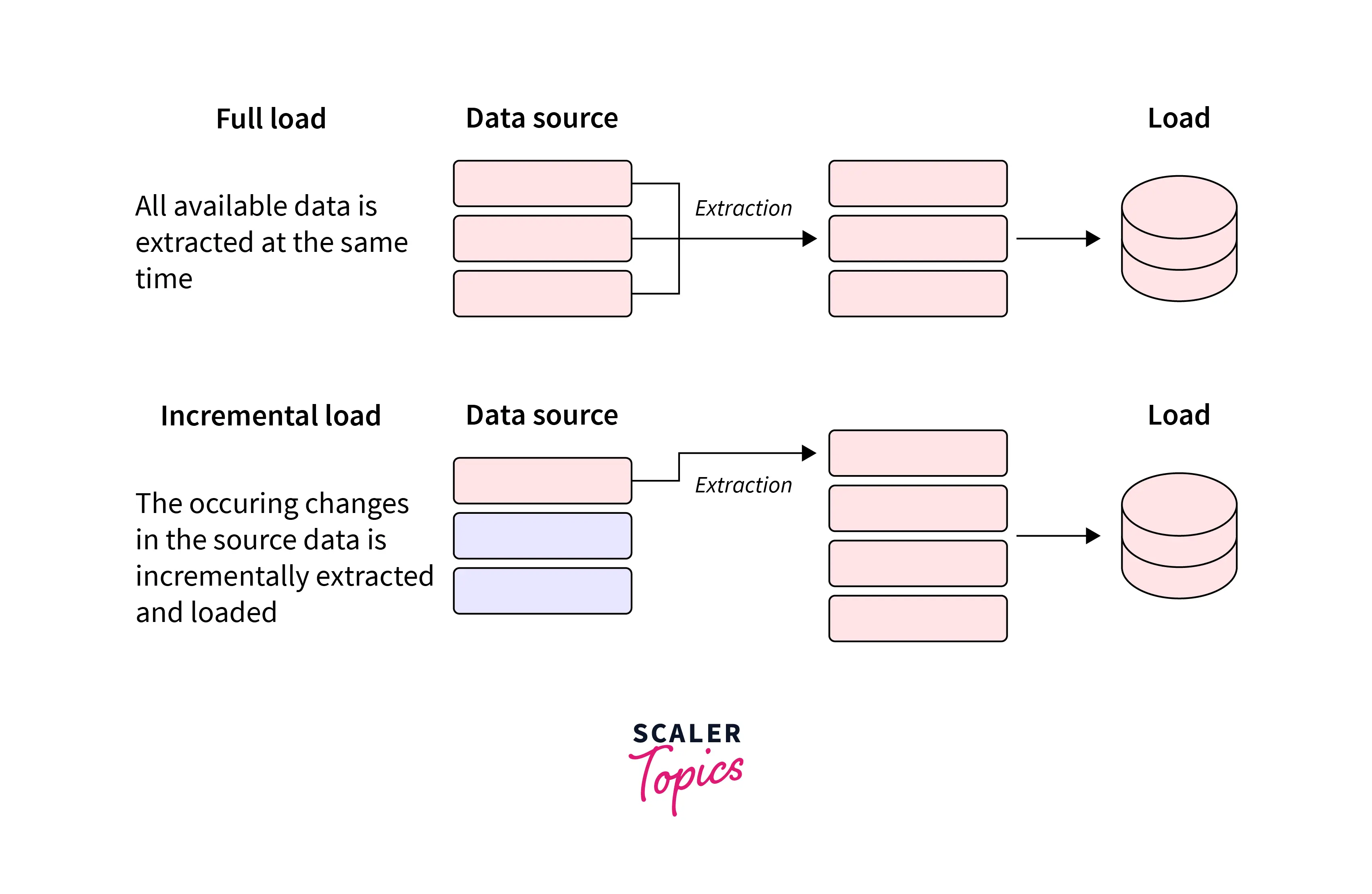

Step 8: Store and Organize the Data

Choose an appropriate storage solution for your extracted data. This could be a database, a cloud storage service, or a simple file system. Ensure that your storage solution is secure, scalable, and accessible to authorized users. Organize the data in a way that facilitates easy retrieval and analysis.
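For small to medium projects, a single-file SQLite database is a simple starting point. It ships with Python as the `sqlite3` module. The sketch below assumes a hypothetical `products` table keyed by product name. It uses `INSERT OR REPLACE`, so re-running an extraction updates existing rows instead of duplicating them. It also records when each row was extracted, which helps later analysis.

```python
import sqlite3

# Hypothetical schema: one row per product name, keeping the latest price.
SCHEMA = """
CREATE TABLE IF NOT EXISTS products (
    name         TEXT PRIMARY KEY,
    price        REAL NOT NULL,
    extracted_at TEXT NOT NULL  -- ISO 8601 timestamp of the extraction run
)
"""

def store(conn, rows):
    """Insert rows (dicts with name, price, extracted_at), replacing same-name rows."""
    with conn:  # commits on success, rolls back on error
        conn.execute(SCHEMA)
        conn.executemany(
            "INSERT OR REPLACE INTO products (name, price, extracted_at) "
            "VALUES (:name, :price, :extracted_at)",
            rows,
        )

if __name__ == "__main__":
    conn = sqlite3.connect("extracted.db")  # hypothetical file name
    store(conn, [{"name": "Widget", "price": 19.99, "extracted_at": "2024-03-01T12:00:00Z"}])
    print(conn.execute("SELECT name, price, extracted_at FROM products").fetchall())
    conn.close()
```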

Step 9: Analyze and Visualize the Data

With your data cleaned and organized, it’s time to analyze and visualize it. Use data analysis techniques and tools to uncover insights and patterns. Create visualizations like charts, graphs, or maps to communicate your findings effectively. Ensure that your analysis is aligned with your initial objectives.
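Analysis tools range from spreadsheets to pandas to BI dashboards. As a dependency-free sketch, the code below uses Python's `statistics` module to compute per-group counts, means, and medians. It then draws a rough text bar chart as a stand-in for a real plotting library such as matplotlib. The field names `category` and `price` and the sample rows are hypothetical.

```python
from collections import defaultdict
from statistics import mean, median

def summarize(rows, group_field, value_field):
    """Count, mean, and median of value_field for each distinct group_field value."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[group_field]].append(row[value_field])
    return {
        key: {"count": len(vals), "mean": round(mean(vals), 2), "median": median(vals)}
        for key, vals in sorted(groups.items())
    }

def text_bars(summary, metric="mean", width=30):
    """One '#' bar per group, scaled so the largest value fills width characters."""
    top = max((s[metric] for s in summary.values()), default=0)
    lines = []
    for key, stats in summary.items():
        length = round(width * stats[metric] / top) if top else 0
        lines.append(f"{key:<8} {'#' * length} {stats[metric]}")
    return lines

# Hypothetical cleaned rows.
SAMPLE_ROWS = [
    {"category": "tools", "price": 10.0},
    {"category": "tools", "price": 20.0},
    {"category": "tools", "price": 60.0},
    {"category": "toys", "price": 5.0},
]

if __name__ == "__main__":
    summary = summarize(SAMPLE_ROWS, "category", "price")
    print(summary)
    print("\n".join(text_bars(summary)))
```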

Step 10: Automate and Schedule Extractions

To ensure continuous access to fresh data, consider automating your extraction process. Schedule regular extractions to keep your data up to date. This step is particularly important for dynamic data sources like websites or APIs that frequently update their content.
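In production, scheduling is usually handed to an OS scheduler such as cron or to a workflow orchestrator. The sketch below shows the core idea in plain Python. It runs a job at a fixed interval measured from each run's scheduled start, so runs that finish within the interval do not gradually shift the schedule. If a run fails, it logs the error and keeps going. `run_periodically` and its parameters are hypothetical names. The injectable `sleep` and `clock` arguments are there only to make it testable.

```python
import logging
import time

log = logging.getLogger("scheduler")

def run_periodically(job, interval_s, max_runs=None, sleep=time.sleep, clock=time.monotonic):
    """Call job() every interval_s seconds; max_runs=None means run forever."""
    runs = 0
    next_start = clock()
    while max_runs is None or runs < max_runs:
        delay = next_start - clock()
        if delay > 0:
            sleep(delay)
        try:
            job()
        except Exception:
            # One failed extraction should not stop future scheduled runs.
            log.exception("scheduled extraction failed")
        runs += 1
        next_start += interval_s  # anchor to the schedule, not to when job() finished
```

For example, `run_periodically(my_extraction, interval_s=3600)` would call a hypothetical `my_extraction` function once an hour until the process is stopped.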

Conclusion:

By following these 10 steps, you can confidently embark on your data extraction journey. Remember, data extraction is an iterative process, and you may need to refine your approach as you gain more insights. Stay adaptable, and don’t be afraid to explore new tools and techniques to enhance your data extraction capabilities.

FAQ Section:

What is data extraction, and why is it important?

Data extraction is the process of retrieving data from various sources for analysis and insights. It’s important because it enables businesses and researchers to make informed decisions based on accurate and up-to-date information.

How often should I update my extracted data?

The frequency of updates depends on the nature of your data sources. For dynamic sources like social media or news websites, daily or even hourly updates might be necessary. For more static sources, weekly or monthly updates could suffice.

What are some common challenges in data extraction?

Common challenges include data quality issues, complex data structures, and changing data sources. It’s important to have a robust data validation and cleaning process to address these challenges effectively.

Can I use data extraction for personal projects or small businesses?

Absolutely! Data extraction is a powerful tool for personal projects and small businesses. It can help you gain valuable insights from your data, improve decision-making, and stay competitive in your industry.

What are some best practices for data extraction security?

Ensure you have proper access controls, encrypt sensitive data, and regularly monitor your extraction processes for potential security breaches. Additionally, be mindful of data privacy regulations like GDPR or CCPA.